AI and Machine Learning

FabVis - Development of a Machine Vision based Fabric Quality Inspection System

This project is developing an automated fabric quality inspection machine based on computer vision and machine learning. A prototype is being implemented using an array of industrial inspection cameras interfaced for parallel image acquisition, image processing software running on parallel processors, image classification with a state-of-the-art classifier, and web-based control, monitoring, and reporting.

| Funding | This is an Accelerating Higher Education Expansion and Development Operation (AHEAD) Research, Innovation and Commercialization (RIC) granted project. |

| Datasets | A.P.P.S. Pathirana, Fabric Stain Dataset (link) |

| | R.M.S. Ranathunga, G.P.P.D. Bandara, K.P.T.K. Bandara, R.A.T.K. Ranatunga, B.K.D.V. Vimarshana, Fabric Defect Dataset (link) |

| Publications | C. D. Gamage, C. R. De Silva, G. H. S. I. Dharmaratna, P. S. H. Pallemulla, R. M. S. Ranathunga, R. A. S. K. Jayasena, R. M. K. V. Ratnayake, S. M. Kahawala, S. J. Sooriyaarachchi, "Method And Apparatus for Detecting Surface Defects," National Patent LK/P/21709, Apr 08, 2021 |

| | C. D. Gamage, C. R. De Silva, G. H. S. I. Dharmaratna, P. S. H. Pallemulla, R. M. S. Ranathunga, S. J. Sooriyaarachchi, "Real-Time Autonomous Fabric Quality Inspection System Using Optical-Input-Based Multimodal Feature Detection and Self-Learning Classification," National Patent LK/P/20880, Nov 27, 2019 |

| | C. D. Gamage, C. R. De Silva, G. H. S. I. Dharmaratna, P. S. H. Pallemulla, R. M. S. Ranathunga, R. A. S. K. Jayasena, R. M. K. V. Ratnayake, S. M. Kahawala, S. J. Sooriyaarachchi, "Computer Vision Based Multi-Spectral Automatic Fabric Quality Inspection Machine With Physical Color Referencing," National ID LK/P/13468, Apr 09, 2021 |

| Team | Mr. Suresh Dharmaratne |

| | Mr. Kalana Ratnayake |

| | Ms. Shashikala Ranathunga |

| | Mr. Sajith Pallemulla |

| | Mr. Aruna Jayasena |

| | Mr. Sachin Kahawala |

| Contact | Dr. S J Sooriyaarachchi (sulochanas@cse.mrt.ac.lk) |

Project description to be added.

| Student | Mr. Suresh Dharmaratna |

| Technologies and Conventions | FLIR Grasshopper3 industrial-grade image acquisition devices |

| | Python |

| | Arduino |

Project description to be added.

| Student | Mr. Sajith Pallemulla |

| Technologies and Conventions | Tech 1 |

| | Tech 2 |

Project description to be added.

| Student | Ms. Shashikala Ranathunga |

| Technologies and Conventions | Tech 1 |

| | Tech 1 |

Internet of Things

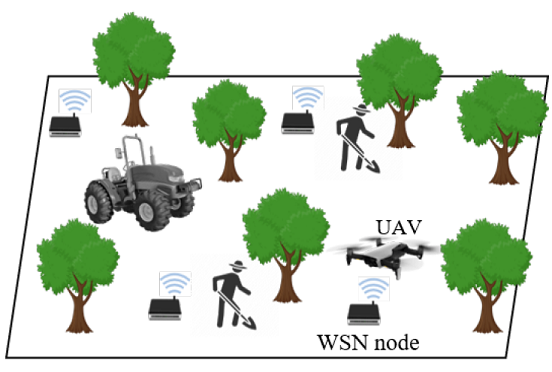

AgrIoT - Development of a Network of Soil Sensors and Drones

This project is developing a network of wireless soil sensors for monitoring agricultural fields, with the sensor data collected by drones flying at low altitude. An in-situ soil sensor has been prototyped to measure soil pH, moisture, nitrogen (N), phosphorus (P), and potassium (K), and calibrated against laboratory results in collaboration with agricultural experts at Rajarata University of Sri Lanka. An autonomous drone is also being prototyped to detect multiple dynamic obstacles and avoid collisions with them.
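The calibration step can be illustrated with an ordinary least-squares fit. This is a minimal sketch, assuming a linear relationship between raw sensor readings and laboratory values for each measured parameter (pH, moisture, N, P, K); the project's actual calibration model is not described here, and `fit_linear_calibration` and `apply_calibration` are hypothetical names.

```python
from typing import Sequence, Tuple


def fit_linear_calibration(sensor: Sequence[float], lab: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of lab = gain * sensor + offset (assumed linear model)."""
    n = len(sensor)
    if n != len(lab) or n < 2:
        raise ValueError("need at least two paired readings")
    mean_x = sum(sensor) / n
    mean_y = sum(lab) / n
    sxx = sum((x - mean_x) ** 2 for x in sensor)
    if sxx == 0:
        raise ValueError("sensor readings must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sensor, lab))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset


def apply_calibration(raw: float, gain: float, offset: float) -> float:
    """Convert a raw field reading into a laboratory-equivalent value."""
    return gain * raw + offset
```

For example, paired readings `[1, 2, 3, 4]` against laboratory values `[3, 5, 7, 9]` (illustrative numbers, not project data) give a gain of 2 and an offset of 1. A nonlinear sensor response would need a higher-order or piecewise model instead.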

| Funding | This is a National Research Council (NRC) Investigator Driven (ID) granted multidisciplinary project. |

| Team | Ms. Dilini Pasqual |

| | Mr. Nuwan Jayawardena |

| Contact | Dr. S J Sooriyaarachchi (sulochanas@cse.mrt.ac.lk) |


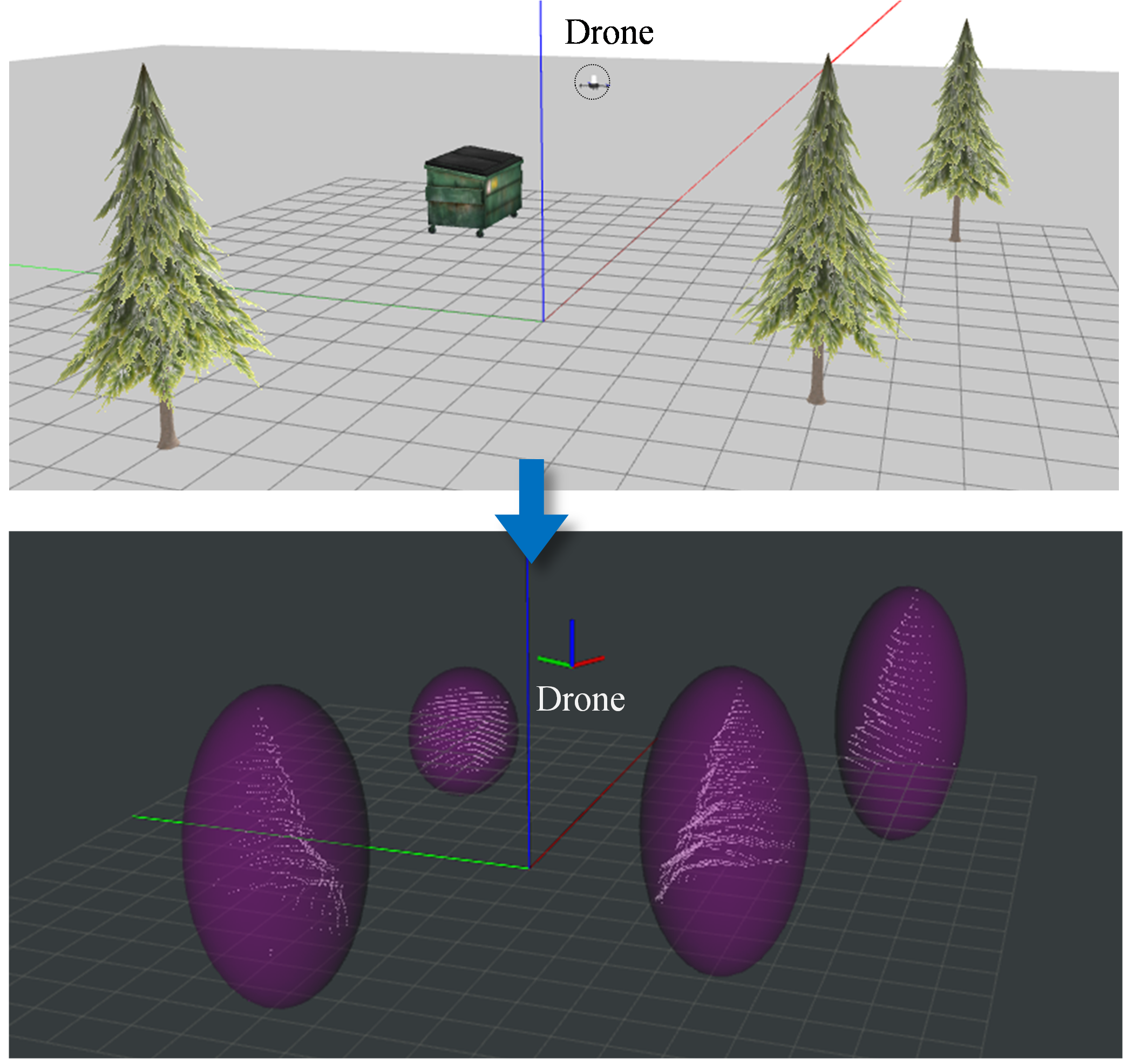

Drones used in precision agriculture must fly at low altitudes, where many static and dynamic obstacles are present. Real-time perception, obstacle detection, and avoidance are therefore crucial for fast, reliable, collision-free navigation in cluttered, dynamic environments with multiple static and moving obstacles. Collision-free drone navigation in complex environments has been widely studied over the past decade; however, most drone obstacle avoidance research assumes that the obstacles in the surrounding environment are static or quasi-static. This research project therefore develops a dynamic obstacle avoidance system for low-altitude autonomous drones used in precision agriculture. The system has two main phases: environmental modelling and collision avoidance.

The environmental modelling phase constructs a computer model of the obstacles in the drone's immediate environment and their dynamic parameters. Evaluation experiments on a simulation test bed showed that the proposed ellipsoidal bounding box approximation represents the environment properly while significantly reducing the enclosed volume for real-world obstacles, compared with the benchmark spherical bounding box approximation. The ellipsoidal approximation also continues to outperform the spherical benchmark as point clouds grow larger, a trend driven by the industry's development of higher-quality, higher-performance perception sensors.

The collision avoidance phase detects collision risks, then plans and executes collision-free navigation. This phase is currently being developed to achieve fast, reliable, collision-free navigation in the presence of multiple static and dynamic obstacles.
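To make the volume comparison concrete, the sketch below fits both shapes to the same point cloud. It is only an illustration under stated assumptions: both shapes are centred on the axis-aligned box around the points, and the ellipsoid is axis-aligned and scaled until the farthest point lies on its surface. The project's actual ellipsoidal approximation method is not specified here, and all function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def _box_center(points: Sequence[Point]) -> Tuple[float, float, float]:
    # Centre of the axis-aligned box around the points, shared by both shapes.
    return tuple(
        (min(p[i] for p in points) + max(p[i] for p in points)) / 2 for i in range(3)
    )


def bounding_sphere(points: Sequence[Point]) -> Tuple[Point, float]:
    """Spherical approximation: smallest sphere about the box centre enclosing all points."""
    center = _box_center(points)
    radius = max(math.dist(p, center) for p in points)
    return center, radius


def bounding_ellipsoid(points: Sequence[Point], min_half_extent: float = 1e-6):
    """Axis-aligned ellipsoid scaled so that the farthest point lies on its surface."""
    center = _box_center(points)
    # Half extents of the box; a tiny floor avoids division by zero for flat clouds.
    half = [
        max((max(p[i] for p in points) - min(p[i] for p in points)) / 2, min_half_extent)
        for i in range(3)
    ]
    # Largest normalised distance of any point; scaling by it guarantees enclosure.
    scale = max(
        math.sqrt(sum(((p[i] - center[i]) / half[i]) ** 2 for i in range(3)))
        for p in points
    )
    return center, tuple(scale * h for h in half)


def sphere_volume(radius: float) -> float:
    return 4.0 / 3.0 * math.pi * radius ** 3


def ellipsoid_volume(semi_axes: Tuple[float, float, float]) -> float:
    a, b, c = semi_axes
    return 4.0 / 3.0 * math.pi * a * b * c
```

For a tall, thin obstacle such as a pole, the ellipsoid encloses only a small fraction of the sphere's volume, while for a compact, cube-like cloud the two shapes end up about the same size. An oriented ellipsoid (for example, one aligned with the principal axes of the points) would fit rotated obstacles more tightly.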


| Student | Ms. Dilini Pasqual |

| Technologies and Conventions | ROS |

| | Gazebo |

| | C++ |

| | Python |

| | Point Cloud Library (PCL) |

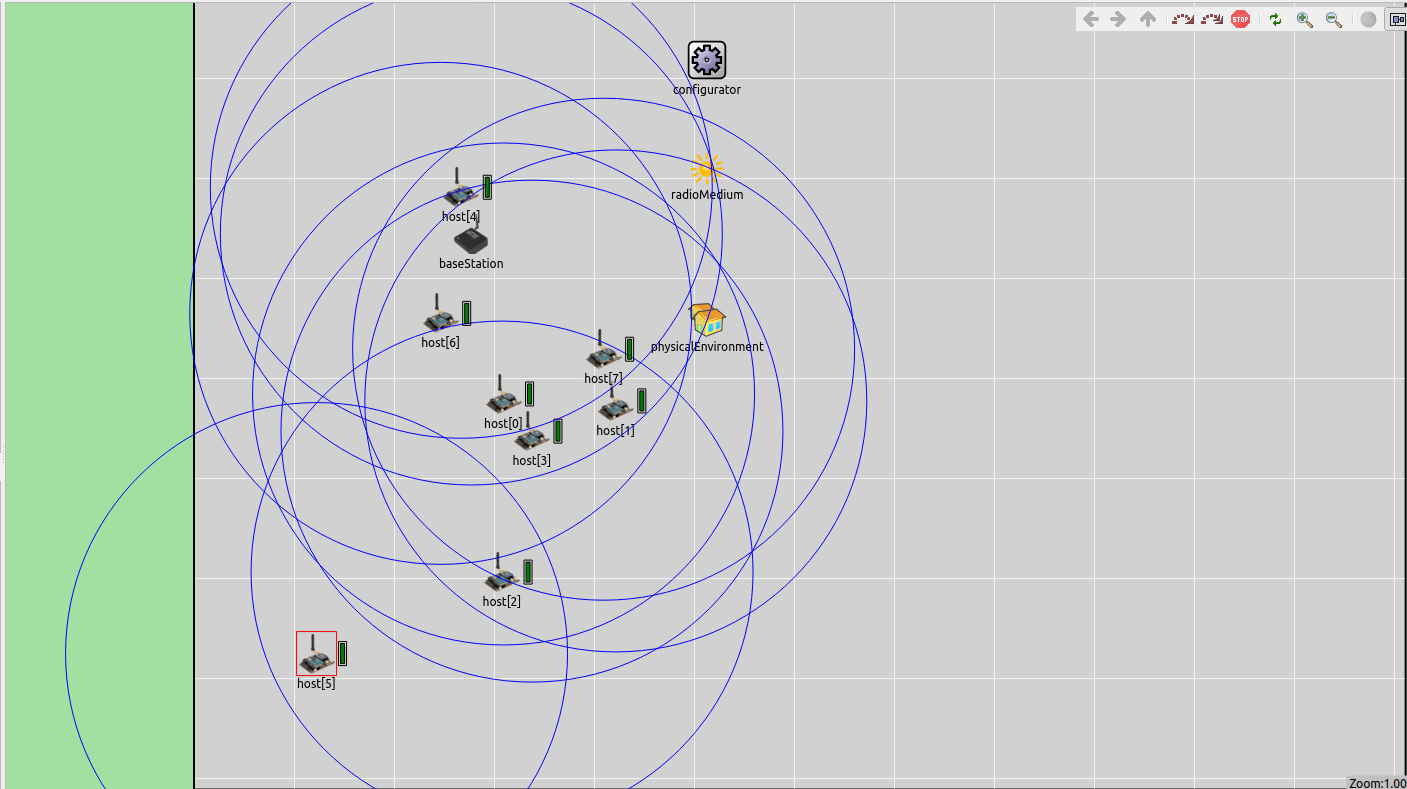

This project is in the domain of precision agriculture. It proposes an energy-efficient, fault-tolerant clustering protocol for operating an ad hoc wireless sensor network deployed in an agricultural setting. The initial implementation will be demonstrated in simulation, and the final deployment will use actual hardware.
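Rotating the cluster-head role is a common way to spread energy use evenly across a wireless sensor network. The sketch below uses the classic LEACH election threshold as a stand-in, since the proposed protocol itself is not described here; it ignores residual energy, radio models, and fault tolerance, and the function names are hypothetical.

```python
import random
from typing import List, Sequence, Set


def leach_threshold(p: float, r: int) -> float:
    """LEACH threshold T(n) in round r for a node that is still eligible.

    p is the desired fraction of nodes acting as cluster heads per round.
    """
    epoch = round(1 / p)  # every node serves once within this many rounds
    return min(1.0, p / (1 - p * (r % epoch)))


def elect_cluster_heads(
    node_ids: Sequence[int], p: float, rounds: int, seed: int = 0
) -> List[List[int]]:
    """Return the cluster heads chosen in each round; a node serves at most once per epoch."""
    rng = random.Random(seed)
    epoch = round(1 / p)
    served: Set[int] = set()  # nodes that already served in the current epoch
    history: List[List[int]] = []
    for r in range(rounds):
        if r % epoch == 0:
            served.clear()  # new epoch: every node becomes eligible again
        t = leach_threshold(p, r)
        heads = [n for n in node_ids if n not in served and rng.random() < t]
        served.update(heads)
        history.append(heads)
    return history
```

With `p = 0.1`, each node serves as a cluster head exactly once every 10 rounds, because the threshold reaches 1 in the last round of each epoch for any node that has not yet served.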

| Student | Mr. Nuwan Jayawardena |

| Technologies and Conventions | OMNeT++ |

| | INET |

| | C++ |

| | IEEE 802.15.4 |

| Media | Simulation-based demonstration |

Robotics

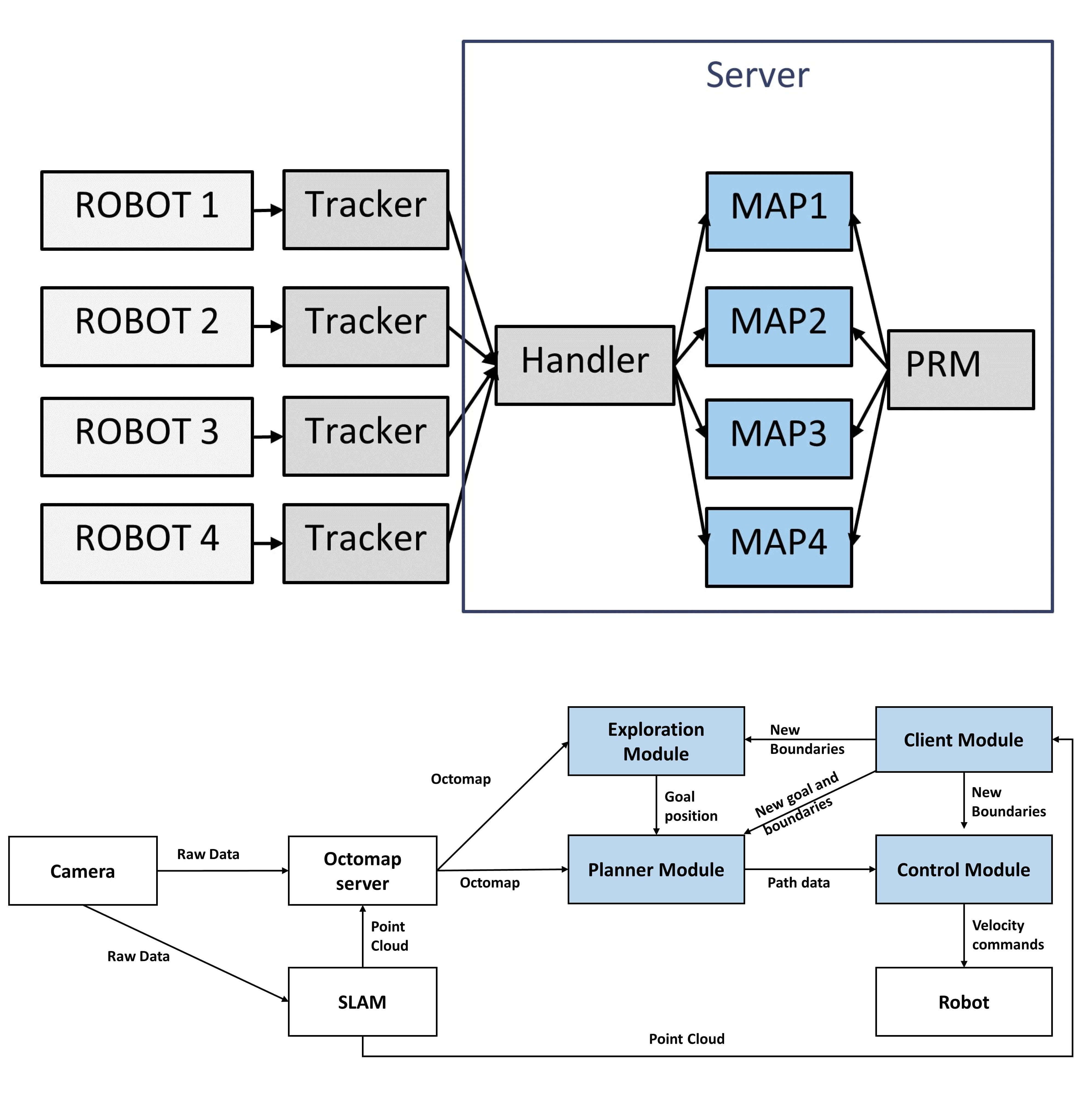

Xavier - Multi-robot 3D Area Exploration

This project is developing a multi-robot system that collaboratively maps an arena in 3D. Each robot autonomously navigates through the map to an optimal frontier, the boundary between explored and unexplored space, for efficient area exploration. The system is designed to work without an initial map, to suit application scenarios such as disaster rescue.

| Funding | This is an industry collaborated project. |

| Publications | R. M. K. V. Ratnayake, S. J. Sooriyaarachchi, C. D. Gamage, "OENS: An Octomap Based Exploration and Navigation System," 5th International Conference on Robotics and Automation Sciences (ICRAS 2021), pp. 230-234 (Awarded the Certificate for the Excellent Oral Presentation in the Session) (link) |

| | C. D. Gamage, R. M. K. V. Ratnayake, S. J. Sooriyaarachchi, "A Robotic Device for Autonomous Navigation in Unstructured Cluttered Environment," National Patent LK/P/21836, Jun 28, 2021 |

| Team | Mr. Kalana Ratnayake |

| | Ms. Tharushi De Silva |

| Contact | Dr. S J Sooriyaarachchi (sulochanas@cse.mrt.ac.lk) |


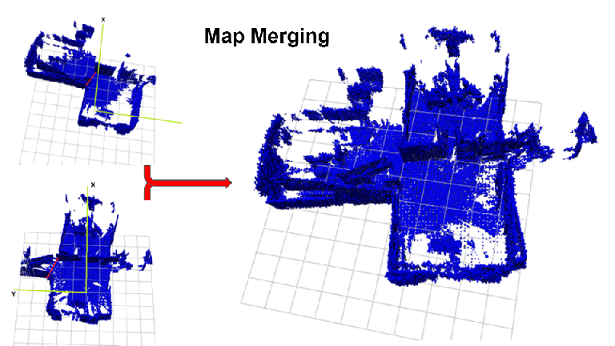

Exploration and navigation in unknown environments can be performed by a single robot or by a group of robots. Current state-of-the-art systems mainly use frontier-detection-based exploration on occupancy grids and are available as either single-robot or multi-robot systems. In this research, we propose a two-stage, octomap-based exploration system that improves coordinated multi-robot exploration. We also present a prototype robotic system that can explore an unmapped area individually, or in coordination with other robots to complete the exploration quickly and efficiently.

During single-robot exploration, the proposed system uses only the first stage, which evaluates the octomap of the environment: it uses the state of each voxel to calculate target locations for navigation with a distance-based cost function. During multi-robot exploration, the system uses both stages. The second stage merges the maps created by the individual robots, and the merged map is then evaluated using octomaps to identify target locations for exploration and navigation.

We also propose a performance evaluation criterion for exploration systems that considers the robot’s operation time, power consumption, and stability. This criterion was used to compare the individual robot system against the multi-robot system, as well as against the state-of-the-art Explore-Lite system. Experimental results show that the proposed individual robot system is about 38% faster than the Explore-Lite system, the multi-robot system with two robots is 48% faster than the individual robot system, and the multi-robot system with three robots is 38% faster than the individual robot system.
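The first-stage idea (voxel states plus a distance-based cost) can be sketched as follows. This is a simplified illustration, assuming a plain dictionary of voxel states in place of an OctoMap octree, 6-connected neighbours, and straight-line distance from the robot as the only cost term; the system's actual cost function and the second-stage map merging are not reproduced, and all names are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Voxel = Tuple[int, int, int]
FREE, OCCUPIED = 0, 1  # voxels missing from the map are treated as unknown
FACE_NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def frontier_voxels(grid: Dict[Voxel, int]) -> List[Voxel]:
    """Free voxels that have at least one unknown face neighbour."""
    return [
        v
        for v, state in grid.items()
        if state == FREE
        and any((v[0] + dx, v[1] + dy, v[2] + dz) not in grid for dx, dy, dz in FACE_NEIGHBOURS)
    ]


def select_target(
    grid: Dict[Voxel, int], robot: Sequence[float], resolution: float = 0.1
) -> Optional[Voxel]:
    """Pick the frontier voxel whose centre is closest (Euclidean) to the robot."""
    frontiers = frontier_voxels(grid)
    if not frontiers:
        return None  # nothing left to explore
    return min(
        frontiers,
        key=lambda v: math.dist([(c + 0.5) * resolution for c in v], robot),
    )
```

A real planner would typically also penalize unreachable or unsafe targets, for example by using path length instead of straight-line distance.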


| Student | Mr. Kalana Ratnayake |

| Technologies and Conventions | Robot Operating System (ROS) |

| | Gazebo Physics Simulator |

| | OctoMap Library |

| | Point Cloud Library (PCL) |

| | C++ |

| | Python |


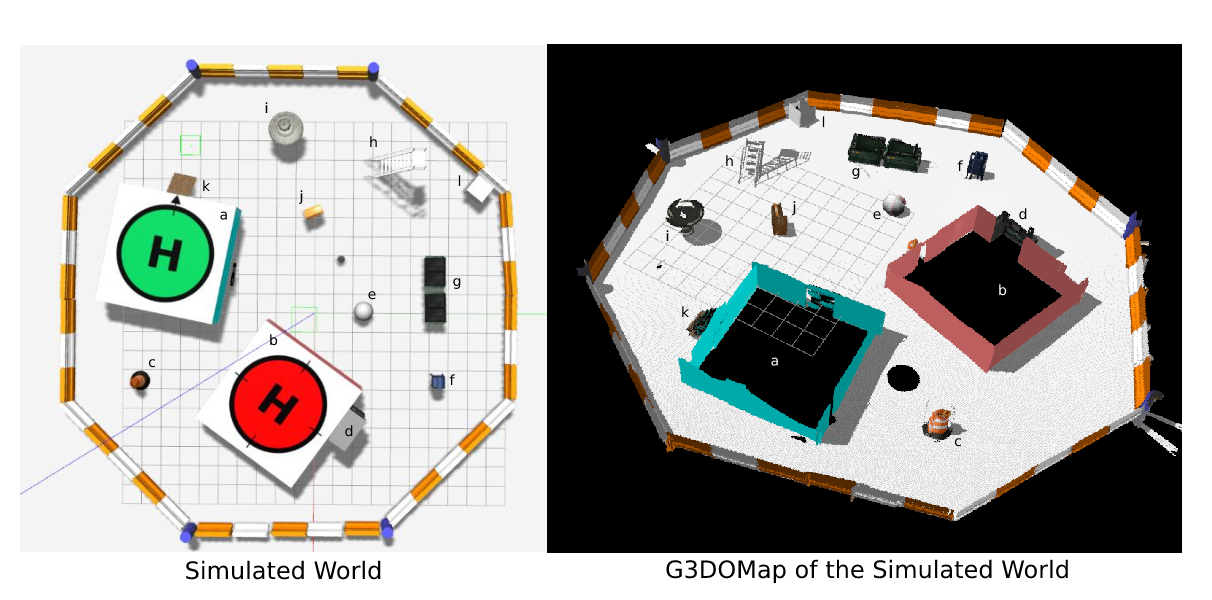

Autonomous multi-robot systems are a popular research field in the 3D mapping of unknown environments. High fault tolerance, increased accuracy, and low coverage latency are the main reasons a multi-robot system is preferred over a single robot in an unpredictable field. Compared with 3D scene reconstruction, a conceptually similar but resource-wise different technique, autonomous mobile robot 3D mapping techniques lack a crucial element: parallel processing. Since most mobile robots run on processing units with low computational power, registering point clouds into high-resolution 3D occupancy grid maps in real time is a challenge. Until recently, parallel point cloud registration was nearly impossible on mobile platforms, and serially processing a large amount of high-frequency input data leads to buffer overflows and failure to include all information in the 3D map. With the introduction of Graphics Processing Units (GPUs) into commodity hardware, mobile robot 3D mapping can now achieve faster performance using the same algorithmic techniques as 3D scene reconstruction. However, parallelizing the mobile robot 3D occupancy grid mapping process is a less frequently discussed topic.

Because a Central Processing Unit (CPU) is needed to run conventional middleware, the operating system, and hardware drivers, the system is developed as a mixed CPU-GPU pipeline. A precomputed free-scan mask accelerates the identification of free voxels in space. Point positions are transformed into unsigned integer coordinates so that they can be encoded as Morton codes, a linear representation of octree nodes, instead of being stored in a traditional spatial octree. The 64-bit M-codes and 32-bit RGBO-codes are stored in a hash table, which reduces access time compared with a hierarchical octree. Point cloud transformation, ray tracing, mapping point coordinates to an integer scale, Morton-coded voxel generation, and RGBO-code generation are performed on the GPU. Retrieving point cloud information, updating the map using bitwise operations, and publishing the map are executed on the CPU.

Additionally, a multi-robot system is prototyped as a team of wheeled robots that autonomously explore an unknown, even-surfaced environment while building and merging fast 3D occupancy grid maps and communicating using a multi-master communication protocol.
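Morton (Z-order) encoding interleaves the bits of the three integer coordinates, so each voxel gets a single integer key that can be stored in a hash table. The sketch below is a CPU-side illustration in Python, assuming 21 bits per axis (63 bits, which fits in a 64-bit M-code), a fixed signed-to-unsigned offset, and a 0.05 m resolution. The project's actual bit layout, its GPU kernels, and its 32-bit RGBO-code format are not described here, so `to_voxel_key`, `mark_occupied`, and the stored occupancy value are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple

BITS = 21                 # 3 x 21 = 63 bits, fits in a 64-bit M-code
OFFSET = 1 << (BITS - 1)  # assumed offset mapping signed voxel indices to unsigned ones


def to_voxel_key(point: Sequence[float], resolution: float = 0.05) -> Tuple[int, int, int]:
    """Map a metric point (metres) to unsigned integer voxel coordinates."""
    key = tuple(math.floor(c / resolution) + OFFSET for c in point)
    if not all(0 <= k < (1 << BITS) for k in key):
        raise ValueError("point lies outside the addressable map")
    return key


def morton_encode(x: int, y: int, z: int) -> int:
    """Interleave the bits of x, y, z (x in the lowest position) into one integer."""
    code = 0
    for i in range(BITS):
        code |= ((x >> i) & 1) << (3 * i)
        code |= ((y >> i) & 1) << (3 * i + 1)
        code |= ((z >> i) & 1) << (3 * i + 2)
    return code


def morton_decode(code: int) -> Tuple[int, int, int]:
    """Inverse of morton_encode."""
    x = y = z = 0
    for i in range(BITS):
        x |= ((code >> (3 * i)) & 1) << i
        y |= ((code >> (3 * i + 1)) & 1) << i
        z |= ((code >> (3 * i + 2)) & 1) << i
    return x, y, z


def mark_occupied(voxel_map: Dict[int, int], point: Sequence[float], resolution: float = 0.05) -> int:
    """Hypothetical map update: a flat hash map keyed by M-code instead of a pointer octree."""
    code = morton_encode(*to_voxel_key(point, resolution))
    voxel_map[code] = 1  # 1 = occupied; the RGBO-code format is not modelled here
    return code
```

One useful property of this layout is that shifting a code right by 3 bits yields the code of the voxel's parent octree node, so octree levels remain recoverable from the flat keys.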


| Student | Ms. Tharushi De Silva |

| Technologies and Conventions | Robot Operating System (ROS) |

| | C++ |

| | CUDA Toolkit |

| | Gazebo Physics Simulator |


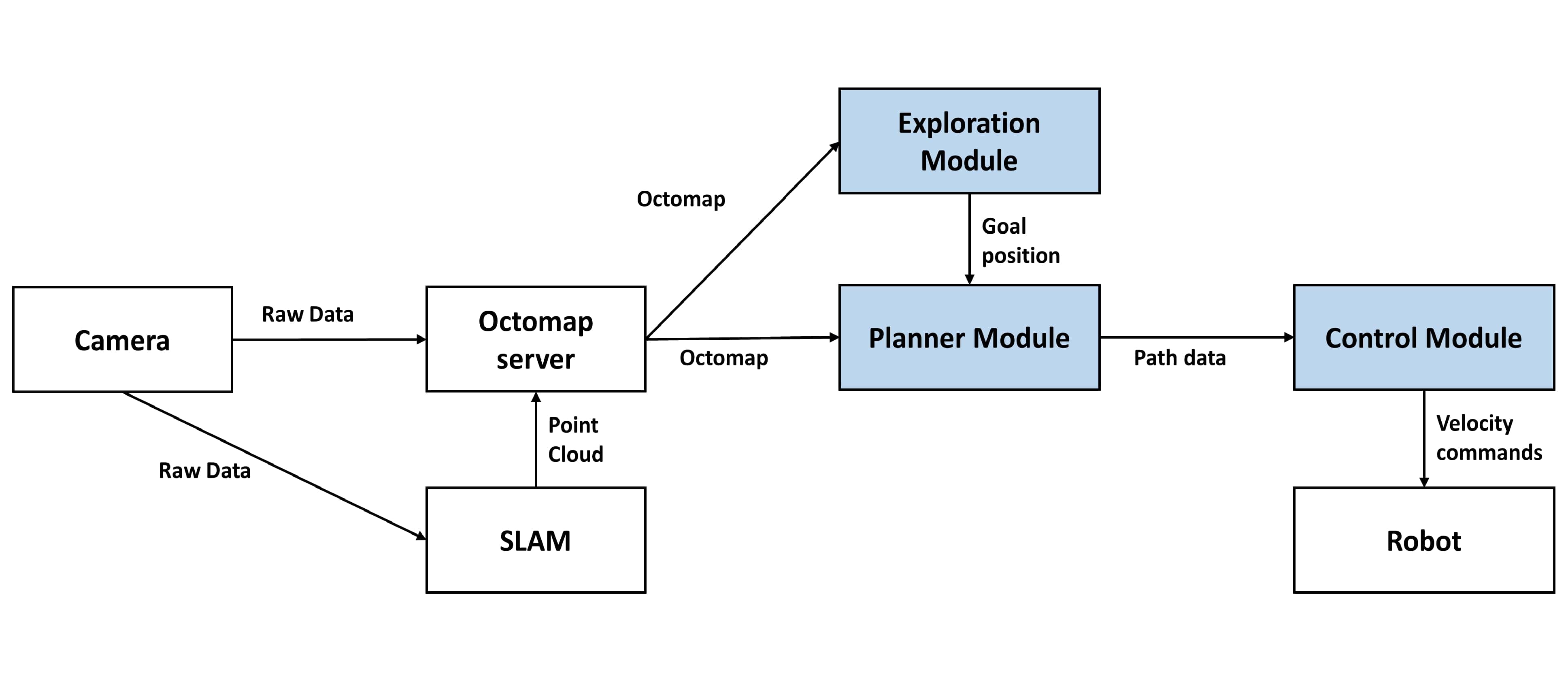

Autonomous navigation is one of the main research areas in modern robotics, and navigation in unstructured environments is one of its main branches. The subsystem of a robot that makes navigation decisions is called a motion planner. A motion planner analyzes the environment, recognizes obstacles, and calculates a path that the robot can follow to achieve its goals. Recent research has focused on building motion planners capable of navigating unstructured environments. To address navigation in unstructured, unknown terrain without prebuilt maps, I present a motion planner that plans a path for the robot to follow using point clouds of the environment. The motion planner consists of a point cloud analyzer, a path planner, and a velocity controller, and is capable of finding a path in an unstructured area. This research also describes how the proposed motion planner can be used to explore and map an unstructured environment.
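The point cloud analyzer and path planner stages can be sketched as below. This is an illustration only: it assumes a 2D traversability grid in which a cell is blocked when its points span more than a height threshold, and it uses A* as a stand-in planner because the planning algorithm is not named here. The velocity controller is omitted, and all names and thresholds are hypothetical.

```python
import heapq
import math
from typing import Dict, Iterable, List, Optional, Tuple

Cell = Tuple[int, int]


def traversability_grid(
    points: Iterable[Tuple[float, float, float]], resolution: float = 0.2, max_step: float = 0.15
) -> Dict[Cell, bool]:
    """Point cloud analyzer: a cell is traversable if its points span at most max_step in height."""
    z_range: Dict[Cell, List[float]] = {}
    for x, y, z in points:
        cell = (math.floor(x / resolution), math.floor(y / resolution))
        lo_hi = z_range.setdefault(cell, [z, z])
        lo_hi[0] = min(lo_hi[0], z)
        lo_hi[1] = max(lo_hi[1], z)
    return {cell: (hi - lo) <= max_step for cell, (lo, hi) in z_range.items()}


def plan_path(grid: Dict[Cell, bool], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* over 8-connected traversable cells; unobserved cells are never entered."""
    open_set = [(0.0, start)]
    g = {start: 0.0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    while open_set:
        _, cell = heapq.heappop(open_set)
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                if dx == dy == 0:
                    continue
                nxt = (cell[0] + dx, cell[1] + dy)
                if not grid.get(nxt, False):
                    continue  # blocked or unknown
                cost = g[cell] + math.hypot(dx, dy)
                if cost < g.get(nxt, math.inf):
                    g[nxt] = cost
                    parent[nxt] = cell
                    # Straight-line distance is an admissible heuristic here.
                    heapq.heappush(open_set, (cost + math.dist(nxt, goal), nxt))
    return None  # goal unreachable
```

Cells with no points are treated as unknown and never entered, which keeps the planner conservative in unmapped terrain.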


| Student | Mr. Kalana Ratnayake |

| Technologies and Conventions | Robot Operating System (ROS) |

| | Gazebo Physics Simulator |

| | OctoMap Library |

| | C++ |

| | Python |